Pseudorandom numbers and linear congruential genrators

Exploring pseudorandom number generators.

Pseudorandom number generators (PRNGs) are algorithms for generating sequences of numbers, that appear — with regard to some chosen special qualities — to have been chosen at random.

People implementing simulations and other software, have for a long time faced a fundamental choice when trying to acquire random numbers: Whether to rely on a physical process, or to use mathematical algorithm.

A physical process, while random, may have unknown and undesired properties. A mathematical one on the the other hand is not truly random at all, but its properties may be better known.

Any one who considers arithmetical

methods of producing random digits

is, of course, in a state of sin.

John Von Neumann, 1951.

The above famous quote is easy to misinterpret. It’s often cited without its less known passage:

[It is] a problem that we suspect of being solvable by some rigorously defined sequence.

Von Neumann needed randomness for Monte Carlo simulation and relied on a mathematical process.

The need for random numbers grew in the first half of the 20’th century. In 1955 the RAND corporation even published a 400 page book titled A Million Random Digits with 100,000 Normal Deviates. An amusing read, undoubtedly.

Since then, many algorithms have been devised for generating pseudorandom numbers. Below, we’ll derive the origins for an elemental one.

The equidistribution theorem

The equidistribution theorem states that, for an irrational constant , the sequence

is uniformly distributed.

Shifting the sequence with a rational constant retains this property. In other words, for a non negative integer, , the sequence:

produces uniformly distributed values in the interval .

Note, that since

we can expand and rewrite the recurrence as:

Substituting for gives us a recurrence relation:

This is the starting point of our PRNG.

A geometric interpretation

An interesting geometric interpretation for our sequence is presented in the book Fourier Analysis: An Introduction1:

Suppose that the sides of a square are reflecting mirrors and that a ray of light leaves a point inside the square. What kind of path will the light trace out?

…

the reader may observe that the path will be either closed and periodic, or it will be dense in the square. The first of these situations will happen if and only if the slope of the initial direction of the light (determined with respect to one of the sides of the square) is rational.

To adapt the quote to our notation, replace with and consider .

Here’s an Python implementation of simulating a ray bouncing in a square:

import matplotlib.pyplot as plt

import numpy as np

from numpy import array as vec2

from numpy.typing import NDArray as Vec2

from matplotlib.figure import Figure, Axes

from math import sqrt

EPSILON = np.finfo(np.float64).eps

SIZE = 10

def is_zero(a: np.float64) -> bool:

return np.abs(a) < EPSILON * 2

def check_intersection(p: Vec2, step: Vec2, v: Vec2, s=10) -> tuple[np.number, Vec2, Vec2] | None:

"""Check intersection between a vector (step) with origin at point (p),

and a square with a side of length (s)."""

planes = (

# tuples of [point on plane, normal, label]

(vec2([0, s]), vec2([0, -1]), "top"),

(vec2([s, 0]), vec2([-1, 0]), "right"),

(vec2([s, 0]), vec2([0, 1]), "bottom"),

(vec2([0, s]), vec2([1, 0]), "left"),

)

result = None

for plane, normal, _ in planes:

# disregard impossible hits along normal or with zero step

if np.all(is_zero(step)) or step.dot(normal) == 0:

continue

# length along v

l = (plane - p).dot(normal) / step.dot(normal)

# disregard behind or far away

if l < 0 or l > 1:

continue

# disregard hit on edge if facing inwards

if is_zero(l) and v.dot(normal) > 0:

continue

# store closest hit

if result is None or result[0] > l:

p_hit = p + l * step

result = l, p_hit, normal

return result

def handle_intersection(l: np.number, p_hit: Vec2, n: Vec2, step: Vec2, v: Vec2):

"""Handle intersection at point (p_hit) at speed (v) and distance (step * l)

with normal (n)"""

# calculate remaining step from reflected penetation

penetration = (1 - l) * v

step = penetration - 2 * penetration.dot(n) * n

# reflect velocity along normal

v = v - 2 * v.dot(n) * n

return p_hit, step, v

def calculate(p: Vec2, v: Vec2, steps: int, size: int) -> tuple[Vec2, Vec2]:

"""Calculate sequence of points for a ray from point (p) with velocity (v)

bouncing in a square with side of length (size)"""

points = [p]

ray = [p]

for _ in range(steps - 1):

step = v

while intersection := check_intersection(p, step, v, size):

l, p_hit, n = intersection

p, step, v = handle_intersection(l, p_hit, n, step, v)

ray.append(p_hit)

p = p + step

points.append(p)

ray.append(p)

is_outside = p[0] < 0 or p[0] > size or p[1] < 0 or p[1] > size

if is_outside:

raise ArithmeticError("error due to numeric precision")

return points, ray

if __name__ == "__main__":

fig, ax = plt.subplots(1, 3, figsize=(8, 6), sharey=True, constrained_layout=True)

plt.setp(ax, xlim=[0, SIZE], ylim=[0, SIZE], box_aspect=1)

# starting point (p), velocity (c)

p = vec2((3.5, 7.5))

c = vec2((1.4, 1))

plots = (

# steps, velocity, fig. axis

( 20, c, ax[0]),

(100, c, ax[1]),

(400, c, ax[2]),

)

for steps, v, ax in plots:

points, ray = calculate(p, v, steps, SIZE)

ax.set(title=f"{steps} steps")

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

ax.annotate("$P$", (p[0], p[1]), ha="right", va="baseline", fontsize=18)

ax.scatter(*np.array(points).T, color="black")

ax.plot(*np.array(ray).T, linewidth=1, color="black", clip_on=False)

fig.suptitle("Bouncing ray\nwith velocity $c=(\\sqrt{2},1)$", fontsize=16, y=0.9)

fig.savefig("reflections.png", bbox_inches="tight", pad_inches=0, dpi=300)

plt.show()

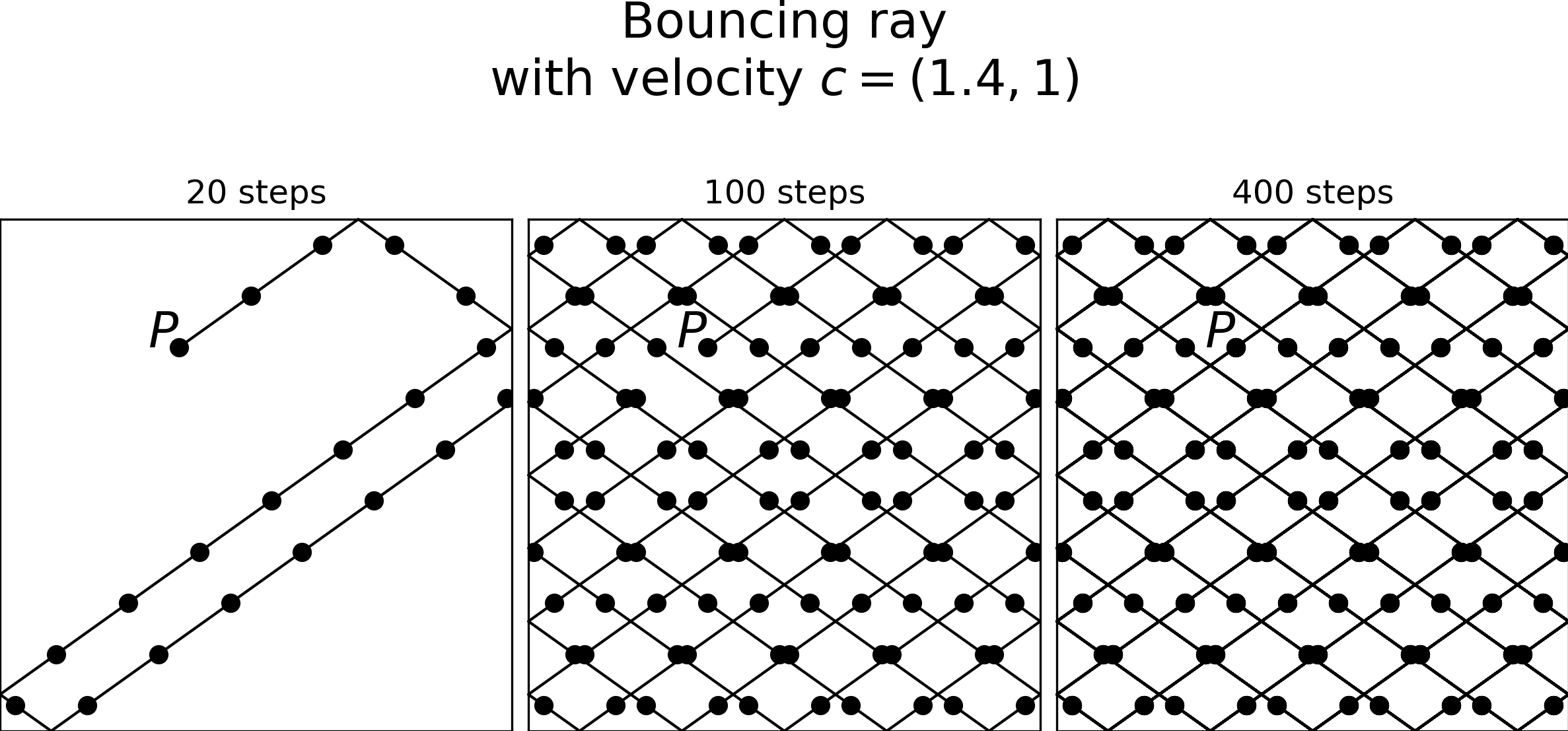

In the above listing, we have defined the square to have sides of length 10. Each iteration, a light ray is advanced by its velocity , and a sample point is recorded. Running the simulation with a rational velocity produces figure 1.

The figure shows an emerging grid pattern. The path of the bouncing ray is closed and has a period of 101 iterations.

What is of interest to us is the distribution of sample points along the x-axis. It’s evident, that the sequence won’t suffice as random enough.

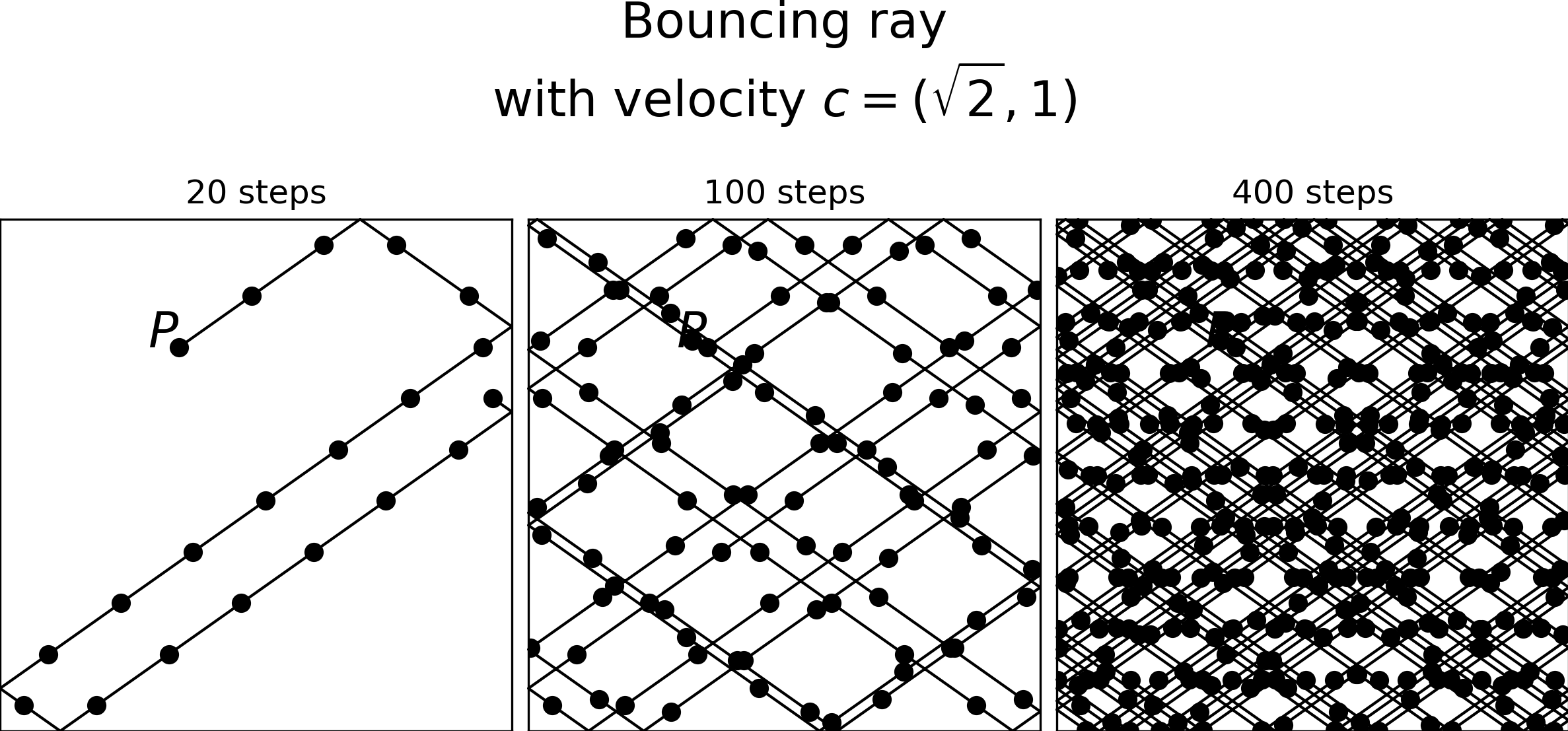

When we change the velocity to an irrational constant, such as c = vec2((sqrt(2), 1)), we get figure figure 2.

This time, the bouncing ray won’t fall into a recurring pattern. The produced sequence is improved in terms of how random it appears visually. This is due to the theoretically infinite period, as predicted by the equidistribution theorem.

I say theoretically, because there’s a catch.

The catch

For the sequence to be usable, the calculations have to be performed with infinite-precision real numbers 2. In practice, evaluating a long sequence on the computers we have today will inevitably face floating-point errors. This means that the properties of our sequence break down, when is large.

To work around this, we’ll need to alter our sequence so that we can implement it on computers with limited floating point precision.

Equation is a special case of a linear congruential generator (LCG). Using LCG’s for pseudorandom number generation dates back to the 1950’s3. Their quality is nowadays regarded as inadequate, but they are interesting for historical reasons4.

The general form of linear congruential generators is defined5 as

where is the multiplier, is the increment and is the modulus. Their resemblence to is clear. We can use known properties of LCG’s to fix our original sequence to work with integers.

Our infinite period (due to the irrational multiplier) has to be replaced with a sufficiently long period .

In The Art of Computer Programming 5, Knuth writes (citing others)

Theorem A.

The linear congruential sequence defined has period length if and only if

- is relatively prime to ;

- is a multiple of , for every prime dividing ;

- is a multiple of 4, if is a multiple of 4.

A further detail is provided in an excercise,

Are the following two conditions sufficient to guarantee the maximum length period, when is a power of 2?

- is odd;

- .

and its answer

Yes, these conditions imply the conditions in Theorem A, since the only prime divisor of is 2, and any odd number is relatively prime to .

Equipped with the above constraints, we can scale equation , and pose it in terms of integers. We then have:

where is a positive integer (usually the word size), is a positive integer of the form and is an odd number.

Exploring sequences

Let’s explore the sequences produced by our generator with some practical constants. With , , , equation produces the series

A pleasing seriees indeed.

Again, with , , , we get

Which seems equally randomply pleasing.

A more excotic series is produced with , , , :

This dauting series can be tamed by dividing the values by and taking the floor, whereby we get:

This is incidentaly is the random number table for the original Doom game. It’s even mentioned in the On-line encyclopedia of integer sequences.

The implementation in Doom is a (very short) lookup table, presumably for performance reasons. It seems it was based on the random function shipped with the Borland C compiler6.

RNG’s are ideal in games, since they are portable and their effects are easier to debug.

That concludes the article, hope you learned something.

-

Fourier Analysis: An Introduction by Elias M. Stein and Rami Shakarchi (2003) ↩

-

Floating-point error mitigation on Wikipedia ↩

-

Mathematical Methods in Large-scale Computing Units in Proceedings of a Second Symposium on Large Scale Digital Calculating Machinery p. 141-146. by D. H. Lehmer (1951) ↩

-

TestU01: A C library for empirical testing of random number generators by L’Ecuyer and R. Simard. (2007) ↩

-

Chapter 3, The art of computer programming. Vol. 2. p. 10–26. by D.E. Knuth (1997) ↩ ↩2

-

Pseudorandom number generator on the Doom Wiki. ↩